November 19, 2025 ⏱️ 12 min

By Mădălin P. (RnD – Cloud Group)

If you are looking for a faster, easier way to deploy, test, and scale your applications without the infrastructure pain, then Serverless Framework is here to help.

It simplifies building and deploying cloud applications by letting you define your entire infrastructure, from APIs and databases to functions and containers, in a single configuration file. With just one command, you can deploy scalable, event-driven applications across AWS and beyond, without ever touching a server.

In this article, we'll explore the Serverless Framework and the Serverless Containers Framework, when to use each, how to replicate cloud environments locally, and why these tools are becoming a good choice for scalable, efficient cloud applications.

Serverless Key Benefits

While the Serverless Framework is best known for managing functions (such as AWS Lambda), the Serverless team also provides the Serverless Containers Framework, a companion tool designed for teams deploying container-based workloads on services like AWS Lambda and ECS Fargate. Together, they enable developers to choose the best compute model for each workload, whether function-based or containerized, under a unified development experience.

One of the key benefits of Serverless is the ability to replicate cloud environments locally. This improves your team’s productivity and reduces cloud service costs during development. In addition to these advantages, the Serverless Framework provides a powerful set of features that streamline your workflow:

- Speed – Deploy complex architectures in minutes, not days.

- Scalability – Functions scale automatically with demand.

- Cost Efficiency – Pay only for what you use; no costs for idle servers.

- Infrastructure as Code (IaC) – Keep your infrastructure in version control for consistency and repeatability.

Basic Project Structure

A basic Serverless project targeting AWS typically includes:

1. Compute

- Functions: AWS Lambda functions (or equivalents in other cloud providers) triggered by events such as HTTP requests, S3 uploads, SQS messages, or by scheduled tasks.

- Containers on AWS Lambda and AWS ECS Fargate: With the help of the Serverless Containers Framework, teams can define and deploy container workloads and switch between running them on Lambda or on ECS Fargate, all in a unified configuration.

2. Events: Triggers that connect functions to cloud resources, including databases, storage buckets, and message queues, declared directly in the configuration.

3. Resources: Infrastructure components your application depends on.
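To make the event types above more concrete, here is a minimal sketch of two non-HTTP triggers in a `serverless.yml` fragment. The function names, handler paths, and bucket name are hypothetical placeholders, and the fragment assumes the bucket already exists (hence `existing: true`).

```yaml
# Fragment of a serverless.yml (names below are illustrative only)
functions:
  nightlyReport:
    handler: handler.nightlyReport
    events:
      # Scheduled task: runs once a day
      - schedule: rate(1 day)
  processUpload:
    handler: handler.processUpload
    events:
      # S3 upload trigger on a bucket that is assumed to exist already
      - s3:
          bucket: uploads-bucket-example
          event: s3:ObjectCreated:*
          existing: true
```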

A simple example of a Serverless Framework YAML configuration file for an API:

    service: aws-api-example

    provider:
      name: aws
      runtime: nodejs20.x
      region: us-east-1

    functions:
      hello:
        handler: handler.hello
        events:
          - httpApi:
              path: /hello
              method: get

    resources:
      Resources:
        MyDynamoDBTable:
          Type: AWS::DynamoDB::Table
          Properties:
            TableName: my-table
            AttributeDefinitions:
              - AttributeName: id
                AttributeType: S
            KeySchema:
              - AttributeName: id
                KeyType: HASH
            BillingMode: PAY_PER_REQUEST

In many cases, you don't need to explicitly define a resource for it to be created. For example, if a function is triggered by an SNS topic or an S3 bucket that you reference by name, the Serverless Framework can provision that resource automatically based on your configuration. Some event sources, such as SQS, instead expect the ARN of a queue that already exists or that you declare yourself. This streamlines setup and reduces boilerplate, while still giving you the flexibility to customize or explicitly define resources when needed.
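One case where implicit provisioning applies, to the best of our understanding of the framework's documented behavior, is an `sns` event given a plain topic name. In the sketch below, the function name and the topic name are hypothetical, and no `resources` entry is written for the topic.

```yaml
# Fragment of a serverless.yml (names below are illustrative only)
functions:
  dispatcher:
    handler: handler.dispatcher
    events:
      # A plain topic name (not an ARN): the topic is expected to be
      # created as part of the deployment
      - sns: dispatch-topic-example
```

If you later need to tune the topic, you can declare it explicitly under `resources` and reference it from the event instead.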

The Serverless Framework allows developers to describe essential infrastructure directly in their project configuration. Common AWS resources provisioned through the framework include:

- DynamoDB Tables for NoSQL storage

- S3 Buckets for file and object storage

- SQS Queues for message queuing and decoupling

- SNS Topics for publish/subscribe communication

- API Gateway for managing APIs

- IAM Roles and Policies for access control

- CloudWatch Alarms for monitoring and alerts

- VPC Configurations for networking and security
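As an illustration of how an explicitly declared resource from this list can be wired to a function, the sketch below defines an SQS queue in CloudFormation syntax and points an `sqs` event at its ARN. The logical ID `JobsQueue`, the queue name, and the function name are assumptions made for the example.

```yaml
# Fragment of a serverless.yml (names below are illustrative only)
functions:
  worker:
    handler: handler.worker
    events:
      - sqs:
          # Resolve the ARN of the queue declared under resources
          arn:
            Fn::GetAtt: [JobsQueue, Arn]

resources:
  Resources:
    JobsQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: jobs-queue-example
```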

Cloud Agnostic Capability

As part of the Serverless Framework V4 release, support for non-AWS cloud providers has been officially deprecated. This includes legacy integrations like Azure, Google Cloud, and others.

While this may affect existing multi-cloud setups, it reflects a strategic shift toward rethinking how the framework supports alternative cloud environments. Rather than maintaining outdated implementations, the Serverless team is laying the foundation for a more modern, robust, and scalable approach to multi-cloud support, which is expected in future releases.

Advanced IaC Capabilities

The Serverless Framework extends its IaC functionality with:

- State management – Tracks infrastructure state via Serverless Dashboard or state files, supporting safe incremental updates.

- Environment Variables and Secrets – Provides flexible environment-specific configurations, including secure secret management.

- Custom IAM Roles – Enforces the principle of least privilege with granular, function-specific permissions.

- Plugin Ecosystem – Encourages modular, DRY configurations through variables and file references.

- Nested Stacks – Allows large applications to be organized into smaller, maintainable components.
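A minimal sketch of how several of these capabilities can look in a `serverless.yml`, using the framework's variable syntax. The parameter path, the config file path, and the variable names are hypothetical, and the IAM statement assumes a table with the logical ID `MyDynamoDBTable` is declared under `resources`.

```yaml
# Fragment of a serverless.yml (paths and names are illustrative only)
provider:
  name: aws
  environment:
    # Current stage, e.g. dev or prod
    STAGE: ${sls:stage}
    # Read from the deploying shell, with a fallback value
    LOG_LEVEL: ${env:LOG_LEVEL, 'info'}
    # Read from AWS Systems Manager Parameter Store at deploy time
    DB_PASSWORD: ${ssm:/my-app/${sls:stage}/db-password}
  iam:
    role:
      statements:
        # Grant only the single action the functions need
        - Effect: Allow
          Action:
            - dynamodb:GetItem
          Resource:
            - Fn::GetAtt: [MyDynamoDBTable, Arn]

custom:
  # Pull stage-specific settings from a separate file
  settings: ${file(./config/${sls:stage}.yml)}
```

Note that a value resolved into `environment` ends up in the function's configuration, so teams with stricter requirements may prefer to fetch secrets at runtime instead.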

Serverless Containers Framework

While the Serverless Framework focuses on functions, many modern workloads, such as long-running APIs, background workers, or stateful services, are better suited for containers.

To address this, the Serverless team introduces the Serverless Containers Framework, an experience for deploying containers on serverless platforms, such as AWS Lambda Containers and ECS Fargate. It provides the same declarative simplicity and developer experience as Serverless Framework, but for containerized workloads.

Next, we will briefly describe the most relevant features of the Serverless Containers Framework (SCF).

Unified Container Development & Deployment:

- Deploy with ease to Lambda functions and ECS Fargate using one workflow

- Combine Lambda and Fargate compute within the same API

- Switch platforms instantly without code rewrites or downtime

- Get production-ready infrastructure in seconds with automated VPC, networking and ALB setup

Rich Development Experience:

- Build Lambda and Fargate containers quickly with full local emulation

- Route and simulate AWS ALB requests via localhost

- Accelerate development with instant hot reloading

- Inject live AWS IAM roles into your containers

Production-Ready Features:

- Smart code/config change detection for deployments

- Supports one or multiple custom domains on the same API

- Automatic SSL certificate management

- Secure AWS IAM and network defaults

- Load environment variables from .env files, AWS Parameter Store, AWS Secrets Manager, Terraform State

- Multi-cloud support coming soon

Configuration

Serverless Container Framework offers simple YAML to deliver complex architectures via a serverless.containers.yml file. Here is a simple example of a full-stack application:

    name: acmeinc

    deployment:
      type: aws@1.0

    containers:
      # Web (Frontend)
      service-web:
        src: ./web
        routing:
          domain: acmeinc.com
          pathPattern: /*
        compute:
          type: awsLambda
      # API (Backend)
      service-api:
        src: ./api
        build:
          # Optional: Arguments to pass to the build, as a map of key-value pairs.
          # This is useful for passing in build arguments to the Dockerfile,
          # like ssh keys to fetch private dependencies, etc.
          args:
            APP_VERSION: '1.0.0' # Example: Application version for labeling
            BUILD_DATE: '2024-01-15' # Example: Build timestamp
          # Optional: The content of a Dockerfile as a string, to use for the container.
          # Please note, this is not recommended for public use, and this is not where
          # you specify the path to your Dockerfile. No config is required for specifying
          # the Dockerfile path, simply put your Dockerfile in the root of the `src` directory.
          dockerFileString: |
            FROM node:18-alpine
            WORKDIR /app
            COPY package*.json ./
            RUN npm ci --only=production
            COPY . .
            EXPOSE 8080
            CMD ["npm", "start"]
          # Optional: Additional Docker build flags (e.g. "--target production").
          # Accepts either a space-delimited string or an array of strings.
          options:
            - '--label=version=1.0.0' # Example: Add metadata labels
            - '--label=maintainer=team@company.com' # Example: Add maintainer info
            - '--progress=plain' # Example: Show plain progress output
        routing:
          domain: api.acmeinc.com
          pathPattern: /api/*
          pathHealthCheck: /health
        compute:
          type: awsFargateEcs
          awsFargateEcs:
            memory: 4096
            cpu: 1024
          environment:
            HELLO: world
          awsIam:
            customPolicy:
              Version: '2012-10-17'
              Statement:
                - Effect: Allow
                  Action:
                    - dynamodb:GetItem
                  Resource:
                    - '*'
        integrations:
          # Slack notifications for API errors
          error-alerts:
            type: slack
            name: 'API Error Alerts'

In the Serverless Container Framework (SCF), there are currently three ways to provide the Docker image for your API:

- You can define a Dockerfile inline in the serverless.containers.yml file using the dockerFileString property, although this is generally not recommended for production use.

- You can create a standard Dockerfile at the root of your source directory (where the source code is located) and point the src property in the YAML file at that directory. SCF will automatically detect the Dockerfile and use it when building the image.

- If you don't provide a Dockerfile, SCF can automatically generate an image for your project based on the runtime detected from standard dependency files: package.json for Node.js projects or requirements.txt for Python projects. This lets you run the code without manually writing a Dockerfile. Automatic generation is not yet supported for other runtimes. However, SCF works with any language as long as you provide a valid Dockerfile.

This flexibility allows developers to either fully customize the container or rely on SCF’s built-in runtime images for rapid development.
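As a sketch of how two compute types can sit behind one API, the fragment below routes two path patterns on the same domain to different containers. It reuses only the keys shown in the full-stack example; the container names, source folders, and paths are hypothetical, and each `src` folder is assumed to contain either a Dockerfile or a supported dependency file.

```yaml
# Sketch of a serverless.containers.yml (names and paths are illustrative only)
name: acmeinc

deployment:
  type: aws@1.0

containers:
  # Lightweight endpoint, run as a Lambda container
  service-users:
    src: ./users
    routing:
      domain: api.acmeinc.com
      pathPattern: /users/*
    compute:
      type: awsLambda
  # Long-running endpoint, run on ECS Fargate
  service-reports:
    src: ./reports
    routing:
      domain: api.acmeinc.com
      pathPattern: /reports/*
      pathHealthCheck: /reports/health
    compute:
      type: awsFargateEcs
```

Under this layout, moving a container between platforms would come down to changing its `compute.type` value and redeploying.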

When to Use Which

Your use case determines the choice: the Serverless Framework is aimed at functions and favors simpler deployment, while the Serverless Containers Framework is more flexible about compute and lets you run your workloads in containers.

| Scenario | Use Serverless Framework | Use Serverless Containers Framework |
|---|---|---|
| Short, event-driven functions | X | |
| Long-running processes | | X |
| High-memory or CPU tasks | | X |
| Mix of both | X | X |
| Gradual migration from traditional containers | | X |
| Lightweight APIs or event handlers | X | |

Serverless Dashboard

The Serverless Dashboard is a powerful command center designed to simplify how teams build, manage, and monitor their serverless applications, bringing clarity, governance, and confidence to your serverless operations.

With its intuitive web-based interface, the Dashboard transforms raw cloud complexity into actionable insights. It gives developers, operators, and product teams a shared space to track deployments, monitor application health, enforce security, and collaborate seamlessly.

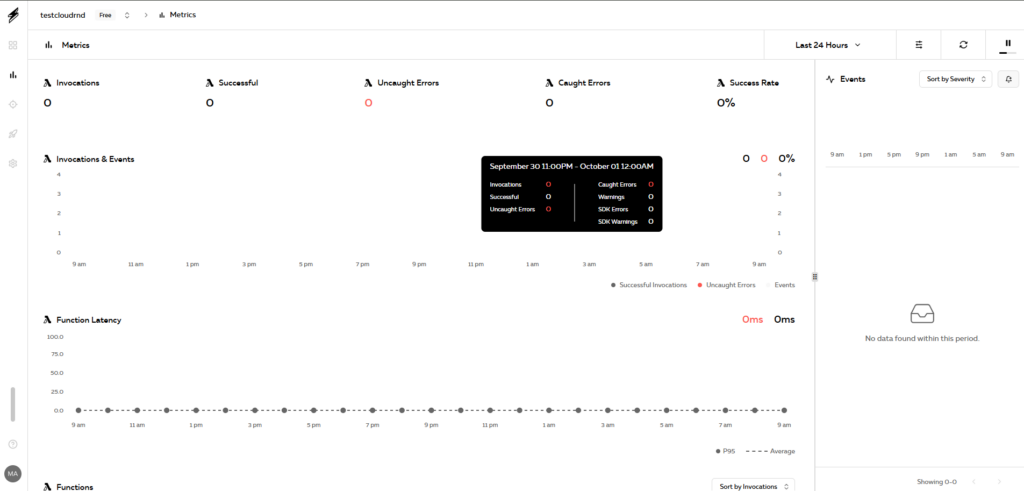

Real-Time Monitoring

Real-time monitoring lets you track function invocations, execution duration, and errors without having to set up manual CloudWatch queries.

CI/CD Integrations

CI/CD integrations allow you to automate deployments directly from GitHub, GitLab, or Bitbucket.

Secrets Management

Secrets management enables you to control access, review deployment history, and coordinate changes within a team environment.

Alerts & Notifications

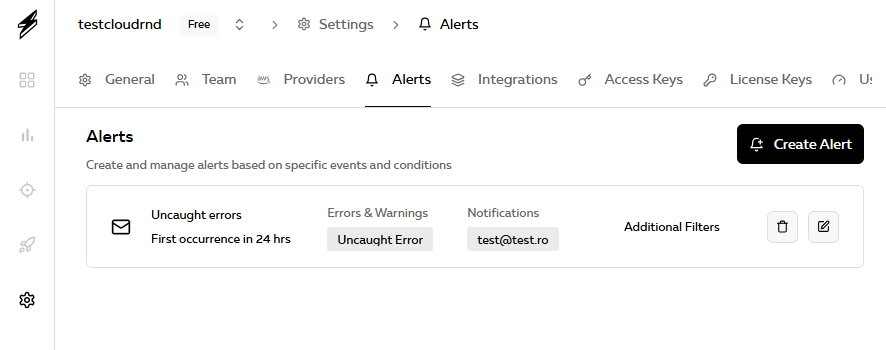

Alerts and notifications keep you informed about errors, performance issues, and usage spikes.

The dashboard integrates tightly with the CLI, meaning you can manage deployments from your terminal while still benefiting from rich visual insights online.
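A service is typically linked to the Dashboard through the `org` and `app` keys at the top of `serverless.yml`. The sketch below assumes an organization and an app with these hypothetical names have already been created in the Dashboard.

```yaml
# Fragment of a serverless.yml (org and app names are illustrative only)
org: my-org # Dashboard organization that owns the service
app: my-app # Dashboard app that groups related services
service: aws-api-example

provider:
  name: aws
  runtime: nodejs20.x
  region: us-east-1
```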

Pricing

The frameworks themselves are open-source and free to use for deploying services. However, the Serverless Dashboard uses a flexible credit-based pricing model:

- Pay-As-You-Go – Pay only for the Credits you use each month, with no long-term commitment, and scale usage up or down as needed.

- Reserved Credits – Reserve Credits in advance for large discounts.

Credit Value breakdown:

- Service instances – An instance is represented by a serverless.yml file that is deployed within a “stage” and “region” for more than 10 days per month. The Serverless Framework charges 1 Credit for each Service Instance.

- Traces – A Trace captures a single AWS Lambda invocation in Serverless Dashboard, along with its errors, spans, and logs. Traces can be viewed in the Trace Explorer and in Alerts for troubleshooting. (1 Credit per 50 thousand Traces).

- Metrics – A Metric is a performance measurement of AWS Lambda invocations in Serverless Dashboard. Each AWS Lambda invocation generates 4 Metrics. (1 Credit per 4 million Metrics).
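As a rough worked example of the rates above, assume a hypothetical month with 5 Service Instances and 2,000,000 Lambda invocations, each producing one Trace and 4 Metrics. The calculation assumes Credits scale linearly with usage; rounding and any other billing rules are not covered here.

```latex
\begin{align*}
\text{Instance Credits} &= 5 \times 1 = 5 \\
\text{Trace Credits} &= \frac{2{,}000{,}000}{50{,}000} = 40 \\
\text{Metric Credits} &= \frac{2{,}000{,}000 \times 4}{4{,}000{,}000} = 2 \\
\text{Total} &= 5 + 40 + 2 = 47 \text{ Credits}
\end{align*}
```

In this scenario Traces dominate the bill, so trace volume is the first figure to estimate when budgeting.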

Local Development & Testing

Local development tools are essential for building serverless applications efficiently. The Serverless Framework offers various plugins to emulate cloud services on your local machine, reducing reliance on cloud deployments during development, while the Serverless Containers Framework has its own built-in local emulation.

- Serverless-Offline

Serverless-offline simulates AWS Lambda and API Gateway, allowing developers to invoke functions locally through HTTP requests. It supports fast testing, hot reloading, and multiple runtimes such as Node.js, Python, Java, and Go.

- Serverless-DynamoDB

Serverless-dynamodb provides a local DynamoDB instance for development and testing without connecting to AWS. It supports Java- or Docker-based setups and integrates with serverless-offline to create a full-featured local environment.

- Serverless-S3-Local

Serverless-s3-local emulates S3 buckets on a local machine, enabling teams to test file uploads, downloads, and bucket configurations. It includes features like CORS support, HTTPS, and customizable server settings.

- Serverless-Offline-SQS

Serverless-offline-sqs allows developers to simulate AWS SQS queues locally using ElasticMQ. This enables event-driven testing of Lambda functions triggered by queue messages without needing AWS access.

- Serverless-Offline-SNS

Serverless-offline-sns provides local emulation of AWS SNS topics, allowing developers to publish messages and trigger Lambda functions without deploying to AWS. It supports automatic topic creation and customizable endpoints.

- Serverless-LocalStack

Serverless-localstack integrates the Serverless Framework with LocalStack, a comprehensive local AWS cloud emulator. It allows developers to spin up local versions of services like Lambda, S3, DynamoDB, and more, creating a near-production testing environment. LocalStack also offers optimizations such as mounting local Lambda code for faster development iterations.
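A minimal sketch of how several of these plugins might be enabled together in `serverless.yml`, assuming they have already been installed as development dependencies. The port value is only an example, each plugin has further options that are not shown, and the ordering (with serverless-offline last) follows a common recommendation in the plugin READMEs rather than a hard rule.

```yaml
# Fragment of a serverless.yml (values are illustrative only)
plugins:
  - serverless-dynamodb
  - serverless-s3-local
  - serverless-offline-sqs
  - serverless-offline-sns
  - serverless-offline

custom:
  serverless-offline:
    # Local port for the emulated HTTP endpoints
    httpPort: 3000
```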

Conclusion

The Serverless ecosystem simplifies building, testing, and deploying modern applications. From defining infrastructure as code to running full cloud stacks locally, it saves time, reduces costs, and enables scalable architectures without server headaches.

Whether you’re starting fresh or migrating existing apps, the Serverless Framework or Serverless Container Framework is a powerful ally for faster delivery and happier developers.